Wireless and Mobile Networks Lab

Prof. Rung-Hung Gau

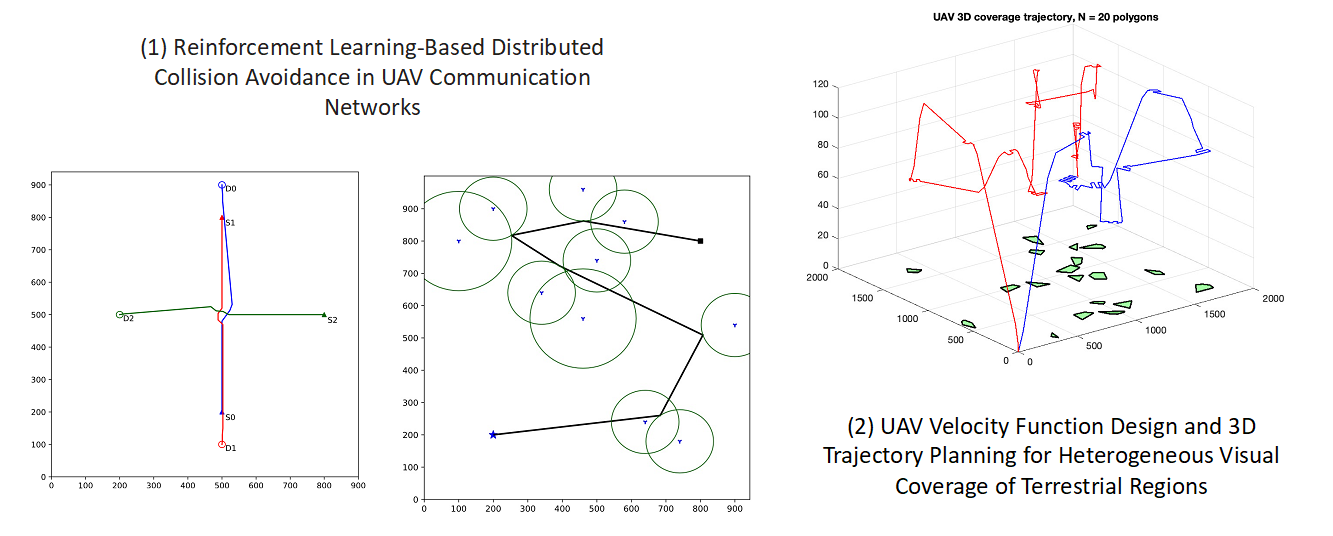

In [2], we propose a novel approach to designing the velocity function and the 3D trajectory of a UAV so that it efficiently attains location-dependent visual coverage. Specifically, the UAV dynamically adjusts its altitude to photograph terrestrial polygons that have unequal image resolution requirements. Unlike prior work that assumes a constant UAV speed, the proposed approach allows the UAV to change its speed. To minimize the task completion time, the approach combines three algorithmic components. The first component uses an aggressive method for selecting the UAV photographing altitudes, designs the UAV velocity functions, and derives the UAV flying times for all pairs of regions. Using the UAV flying times rather than the distances between regions, the second component solves an auxiliary traveling salesman problem to select the visiting order of the terrestrial regions. For each region, the third component creates candidate coverage paths and chooses among them based on the UAV flying time. Simulation results indicate that, in terms of the UAV task completion time, the proposed approach outperforms both a two-dimensional trajectory planning algorithm and a greedy algorithm for planning a three-dimensional trajectory.
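The idea of ordering regions by flying time rather than by distance can be illustrated with a minimal Python sketch. It is not the algorithm from [2]: the trapezoidal speed profile, the separate horizontal and vertical speed limits, the numeric defaults, and all function names (`flying_time`, `leg_time`, `tour_time`, `best_order`) are assumptions made for illustration. The photographing altitudes are taken as given inputs, and a brute-force search stands in for the auxiliary traveling salesman problem.

```python
import itertools
import math


def flying_time(distance, v_max, accel):
    """Time to cover `distance` from rest to rest with a trapezoidal speed
    profile (assumed here; the velocity functions in [2] may differ)."""
    ramp_distance = v_max ** 2 / accel  # distance spent speeding up and slowing down
    if distance >= ramp_distance:
        # Accelerate to v_max, cruise, then decelerate.
        return 2 * v_max / accel + (distance - ramp_distance) / v_max
    # Short hop: the UAV never reaches v_max (triangular profile).
    return 2 * math.sqrt(distance / accel)


def leg_time(p, q, v_h=15.0, a_h=3.0, v_v=4.0, a_v=2.0):
    """Flying time between waypoints p and q, each (x, y, altitude) in meters.
    Horizontal and vertical motion are assumed to run in parallel, so the
    slower of the two determines the leg time. All limits are hypothetical."""
    horizontal = math.hypot(q[0] - p[0], q[1] - p[1])
    vertical = abs(q[2] - p[2])
    return max(flying_time(horizontal, v_h, a_h), flying_time(vertical, v_v, a_v))


def tour_time(depot, waypoints, order):
    """Total flying time of depot -> waypoints in `order` -> depot."""
    stops = [depot] + [waypoints[i] for i in order] + [depot]
    return sum(leg_time(a, b) for a, b in zip(stops, stops[1:]))


def best_order(depot, waypoints):
    """Brute-force the visiting order with the smallest total flying time.
    Only practical for a handful of regions."""
    best_time, best_perm = math.inf, ()
    for perm in itertools.permutations(range(len(waypoints))):
        total = tour_time(depot, waypoints, perm)
        if total < best_time:
            best_time, best_perm = total, perm
    return best_perm, best_time


if __name__ == "__main__":
    depot = (0.0, 0.0, 0.0)
    # One representative waypoint per region; altitudes are illustrative.
    regions = [(300.0, 40.0, 30.0), (80.0, 120.0, 90.0), (200.0, 200.0, 45.0)]
    order, total = best_order(depot, regions)
    print("visiting order:", order, "total flying time (s):", round(total, 1))
```

Because a large altitude change can dominate a short horizontal hop under these assumptions, the time-optimal order may differ from the distance-optimal one, which is the motivation for building the auxiliary problem on flying times.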

[2] Y.-C. Ko and R.-H. Gau, "UAV Velocity Function Design and Trajectory Planning for Heterogeneous Visual Coverage of Terrestrial Regions," in IEEE Transactions on Mobile Computing, vol. 22, no. 10, pp. 6205-6222, Oct. 2023.