Li-Chun Wang (M'96–SM'06–F'11) received the B.S. degree from National Chiao Tung University, Taiwan, R.O.C., in 1986, the M.S. degree from National Taiwan University in 1988, and the M.Sci. and Ph.D. degrees from the Georgia Institute of Technology, Atlanta, in 1995 and 1996, respectively, all in electrical engineering.

From 1990 to 1992, he was with the Telecommunications Laboratories of the Ministry of Transportation and Communications in Taiwan (currently the Telecom Labs of Chunghwa Telecom Co.). In 1995, he was affiliated with Bell Northern Research of Northern Telecom, Inc., Richardson, TX. From 1996 to 2000, he was with AT&T Laboratories, where he was a Senior Technical Staff Member in the Wireless Communications Research Department. In August 2000, he joined the Department of Electrical Engineering of National Chiao Tung University, Taiwan, as an associate professor. He has been a full professor there since 2005.

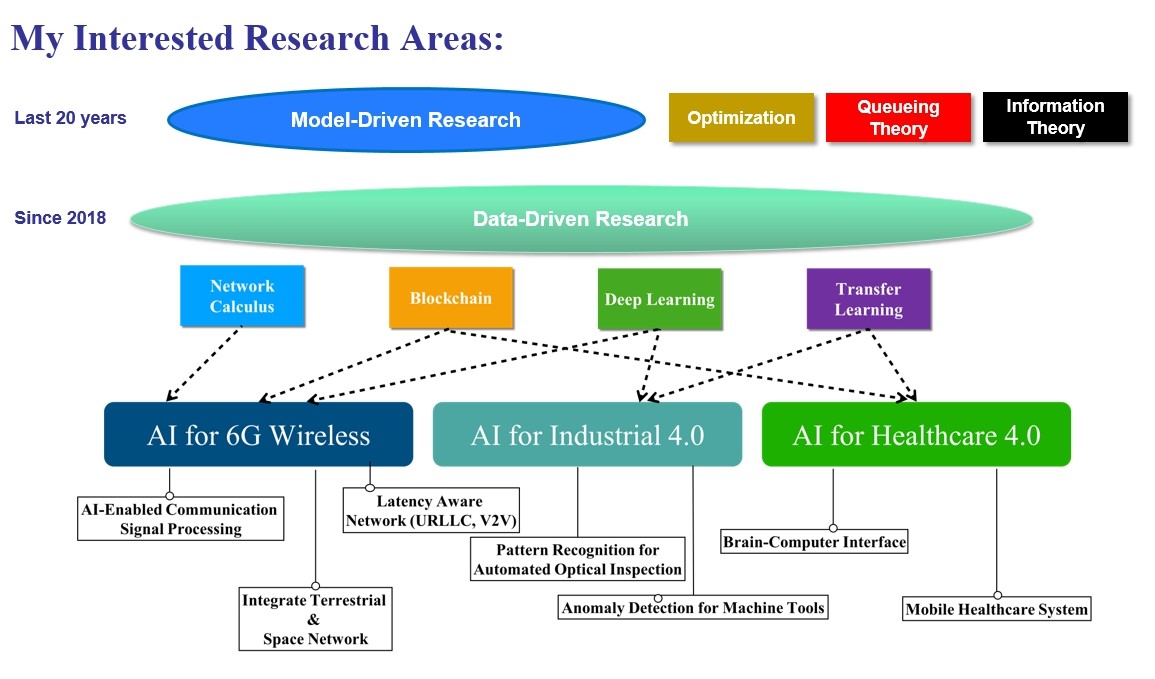

His current research interests are in the areas of radio resource management and cross-layer optimization techniques for wireless systems, heterogeneous wireless network design, and cloud computing for mobile applications.

He was elected an IEEE Fellow in 2011 for his contributions to cellular architectures and radio resource management in wireless networks. Dr. Wang was a co-recipient (with Gordon L. Stuber and Chin-Tau Lea) of the 1997 IEEE Jack Neubauer Best Paper Award for the paper "Architecture Design, Frequency Planning, and Performance Analysis for a Microcell/Macrocell Overlaying System," IEEE Transactions on Vehicular Technology, vol. 46, no. 4, pp. 836-848, 1997. He has published over 150 journal and international conference papers.

He served as an Associate Editor of the IEEE Transactions on Wireless Communications from 2001 to 2005. He was also Guest Editor of the Special Issue on "Mobile Computing and Networking" of the IEEE Journal on Selected Areas in Communications in 2005, and of the Special Issue on "Radio Resource Management and Protocol Engineering in Future IEEE Broadband Networks" of IEEE Wireless Communications Magazine in 2006.

He holds nine U.S. patents.

o Ministry of Science and Technology Contract Research Project (2022~2025)

o 36th Japan Tokyo Gold Medal of International Invention Exhibition of Innovative Geniuses (2022)

o Chinese Institute of Engineers [Outstanding Engineering Professor] (2022)

o Information Society of the Republic of China [K.T. Li Fellow Award] (2021)

o Future Science and Technology Award from the Ministry of Science and Technology (2021)

o Outstanding I.T. Elite Award (2020)

o Outstanding Research Award from the Ministry of Science and Technology (2018)

o Outstanding Research Award from National Science Council (2012)

o IEEE Fellow (2011)

o IEEE VTS Distinguished Lecturer (2011~2013)

o Outstanding Electrical Engineering Award from Chinese Institute of Electrical Engineering (2009)

o 2022 Best Paper Award at the 26th Mobile Computing Symposium and the 17th Wireless and Pervasive Computing Conference

o IEEE Wireless and Optical Communications Conference (WOCC) 30th Best Paper Award (2021)

o Best Paper Award in the Network Academic Excellence Category at the National Telecommunications Symposium (2020)

o MC2019 Outstanding Paper Award at the 24th Mobile Computing Symposium (2019)

o IEEE Wireless and Optical Communications Conference (WOCC) 27th Best Paper Award (2018)

o Best Paper Award at the National Telecommunications Conference (2018)

o IEEE Communications Society Asia-Pacific Board Best Paper Award (2016)

o Taiwan Cloud Calculation Council Best Paper Award (2014)

o 11th Y.Z. Hsu Science Award (2013)

o 2013 Best Paper Award from National Telecommunications Conference

o IEEE VTS Jack Neubauer Memorial Best Paper Award (1997)

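o Editor of 「寫給高中生的-電機科技.做中學」 (Electrical Engineering Technology for High School Students: Learning by Doing; National Chiao Tung University Press, 2013), recommended by the Ministry of Culture in its [Selection of Recommended Extracurricular Reading for Primary and Secondary School Students] (2013)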

o 2009 IEEE MGA Outstanding Large Section Award (served as a Treasurer from 2009 to 2010)

o 2009 IEEE Information Theory Society Chapter of the Year Award (served as Chapter Chair from 2007 to 2009)

o Ph.D. in Electrical Engineering, Georgia Institute of Technology, Atlanta, Georgia, 1996

o M.Sci. in Electrical Engineering, Georgia Institute of Technology, Atlanta, Georgia, 1995

o M.S. in Electrical Engineering, National Taiwan University, Taipei, Taiwan, 1988

o B.S. in Electrical Engineering, National Chiao-Tung University, Hsinchu, Taiwan, 1986

The 6G wireless communication network is regarded as a new generation integrating artificial intelligence (AI) and the Internet of Things (IoT). Through the application of AI, wireless communication technology is expected to extend widely across various application domains, enabling interconnectivity among mobile devices, small base stations, vehicles, and more. Our team focuses on three prominent research topics in future 6G wireless communication: AI-based wireless signal processing, aerial communication networks for unmanned aerial vehicles (UAVs), and low-latency networks.

Unlike the network architectures of 4G and 5G mobile communication systems, future 6G wireless communication requires a flexible framework that can support diverse communication applications. In traditional model-based network frameworks, it is hard to keep re-optimizing system parameters as the environment changes. AI is expected to address this challenge efficiently by giving communication systems the ability to learn, so they can configure optimal system parameters dynamically. This ability is especially valuable in radio resource management, network resource allocation, and mobility management, where it can substantially improve efficiency. Machine learning has matured in the field of wireless communication, but models optimized specifically for wireless systems in different application scenarios are still lacking. AI for 6G wireless communication is therefore a valuable and impactful research topic.

Communication networks for unmanned aerial vehicles (UAVs) are another promising research topic in 6G wireless communication. Emerging UAV applications include aerial inspection, aerial logistics, and airborne communication base stations. These applications require UAVs to have advanced networking capabilities so they can offload aerial and flight data to ground stations in real time. An important challenge is designing a communication network system that meets the high-bandwidth and high-reliability requirements of these UAV applications.

Using AI to address energy management, data privacy, and flight safety in UAV networks is also a significant and less-explored area of research. Lastly, with the development of Internet of Things (IoT) technology, applications such as connected vehicles and UAV networks have gained considerable attention. These applications need low-latency, high-reliability data transmission, so low-latency networks and edge computing are also hot topics in 6G wireless communication.

With the rise of Industry 4.0, discussions of the future of 6G also cover smart factories. In recent years, machine learning and deep learning algorithms have evolved rapidly. As a result, training models on large amounts of machine data has become the mainstream approach to smart manufacturing. The goals include (1) real-time monitoring of production quality, (2) predicting imminent machine failures, and (3) reducing production costs. The machine tool industry is one of the mainstream sectors of Taiwan's industrial landscape. In this industry, components such as high-speed rotating ball bearings and the gears inside turbines run 24 hours a day. These parts are among the most susceptible to wear. If maintenance is performed only after a failure, the factory can suffer significant losses: the cost of replacement parts, downtime of the whole production line, and defective products made by malfunctioning parts.

To reduce the losses caused by machine damage, factories aim to achieve predictive maintenance through Prognostic and Health Management (PHM). A reliable AI-based predictive maintenance model needs sufficient abnormal data for training, but such data are hard to obtain in a factory. Collecting them requires deploying numerous sensors on the machines and setting up industrial computers to receive sensor data continuously. Moreover, machines operate normally most of the time, and abnormalities may appear only after several years of operation. Accumulating enough abnormal data is therefore time-consuming and can take years.

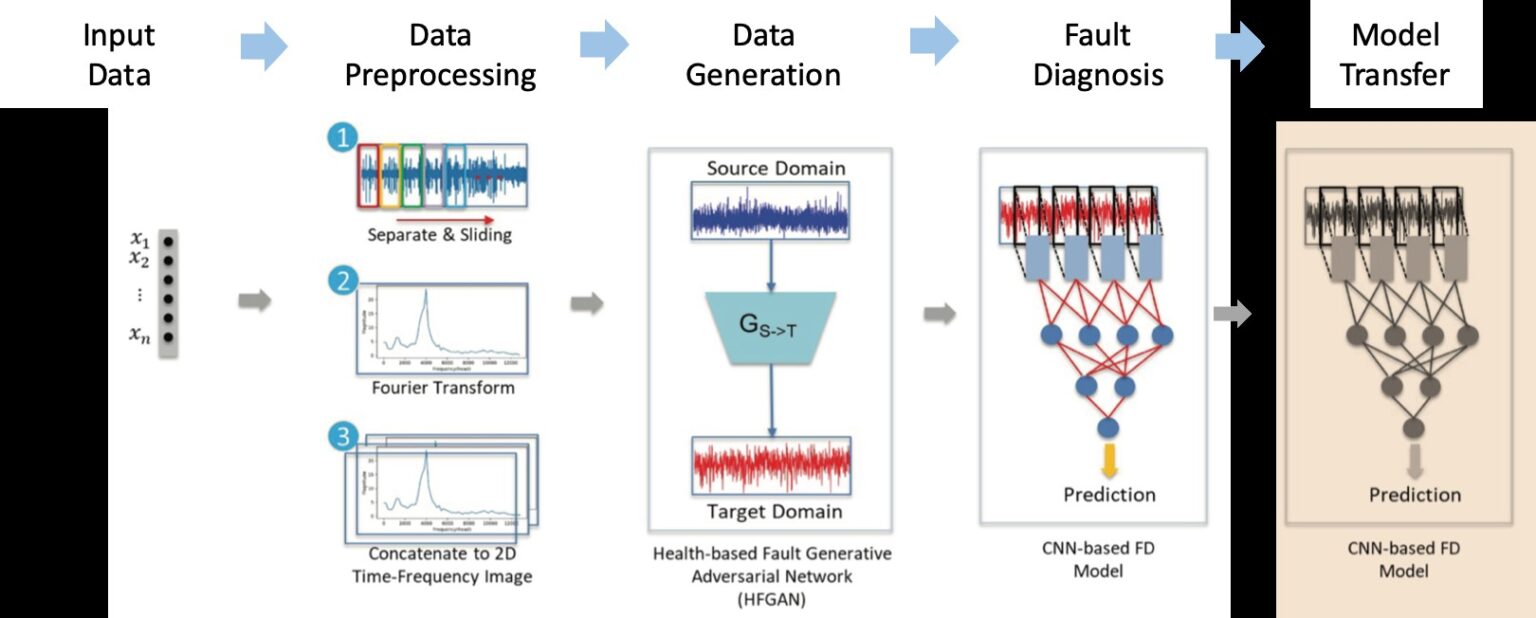

To address these challenges, our team plans to employ artificial intelligence techniques, specifically Generative Adversarial Networks (GANs) and transfer learning, to generate abnormal data and to transfer models across domains. The proposed anomaly diagnosis framework is outlined in the accompanying diagram.

Data Preprocessing Module: The collected sensor data are preprocessed before they are used for training or analysis. Preprocessing includes steps such as segmenting continuous vibration data into windows and applying a Fourier transform to convert each window into the frequency domain.
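The two preprocessing steps can be sketched as follows. This is a minimal, illustrative version that assumes a single-axis vibration signal stored as a Python list and uses a periodic Hann window. It computes a naive DFT for readability, whereas a production pipeline would normally use an FFT library. The function names, window size, and hop size are hypothetical, not the team's actual parameters.

```python
import cmath
import math

def sliding_windows(signal, size, hop):
    """Cut a continuous signal into frames of `size` samples, advancing `hop` samples each time."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]

def hann(size):
    """Periodic Hann window; tapering the frame edges reduces spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / size) for n in range(size)]

def magnitude_spectrum(frame):
    """One-sided magnitude spectrum of a Hann-windowed frame via a naive O(N^2) DFT."""
    n = len(frame)
    x = [s * w for s, w in zip(frame, hann(n))]
    return [
        abs(sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]

# Example: a tone completing 8 cycles per 64-sample frame appears in frequency bin 8.
signal = [math.sin(2 * math.pi * 8 * t / 64) for t in range(256)]
frames = sliding_windows(signal, size=64, hop=32)  # 50% overlap -> 7 frames
spectra = [magnitude_spectrum(f) for f in frames]
peak_bin = max(range(len(spectra[0])), key=spectra[0].__getitem__)
print(len(frames), peak_bin)  # 7 8
```

Overlapping windows (here 50%) keep transient events from being split across a frame boundary. Each spectrum can then serve as one training or analysis sample.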

Data Generation Module: Abnormal data are scarce compared with normal data. Research in this module therefore focuses on using the large amount of healthy data to generate abnormal data with Generative Adversarial Network (GAN) technology. Creating realistic synthetic instances of the various types of anomalies is a significant research challenge.
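For reference, the standard GAN formulation trains a generator $G$ and a discriminator $D$ on the minimax objective below. In this context, $x$ would be real (scarce) abnormal frequency-domain samples and $z$ the generator's input. That mapping is an assumption for illustration. The text suggests the input may be derived from healthy data (for example, a healthy spectrum plus noise), but the exact conditioning is not specified here.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\left[\log D(x)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]
$$

$D$ learns to tell real abnormal samples from generated ones, while $G$ learns to produce samples that $D$ accepts as real. Once trained, $G$ can be sampled repeatedly to enlarge the abnormal class of the training set.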

Establishment of Anomaly Diagnosis Model: Once a substantial amount of abnormal data has been generated, the anomaly diagnosis model can be built with neural network architectures such as Recurrent Neural Networks (RNNs) or Convolutional Neural Networks (CNNs).
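As a minimal illustration of the CNN option, the sketch below runs one forward pass of a single-filter 1-D CNN over a magnitude spectrum: convolution, ReLU, global max pooling, and a logistic output. The kernel `[-1, 2, -1]` and the output weights are hand-picked assumptions, chosen so that a narrow spectral peak raises the anomaly score. In a real model, all weights would be learned from the (real and generated) training data, and training is omitted here.

```python
import math

def conv1d(x, kernel, bias=0.0):
    """'Valid' 1-D convolution (cross-correlation, as computed by CNN layers)."""
    k = len(kernel)
    return [sum(kernel[j] * x[i + j] for j in range(k)) + bias
            for i in range(len(x) - k + 1)]

def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]

def anomaly_score(spectrum, kernel=(-1.0, 2.0, -1.0), w_out=4.0, b_out=-2.0):
    """Conv -> ReLU -> global max pooling -> sigmoid; returns a score in (0, 1)."""
    pooled = max(relu(conv1d(spectrum, kernel)))
    return 1.0 / (1.0 + math.exp(-(w_out * pooled + b_out)))

healthy = [1.0] * 8                                # flat spectrum
faulty = [1.0, 1.0, 1.0, 3.0, 1.0, 1.0, 1.0, 1.0]  # narrow peak at bin 3
print(round(anomaly_score(healthy), 3), round(anomaly_score(faulty), 3))  # 0.119 1.0
```

Global max pooling makes the score insensitive to where in the spectrum the peak occurs. That is one reason convolutional layers suit frequency-domain vibration data.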

Model Transformation: After a model has been built for one type of machine, the next challenge is scaling it to the diverse machines in a factory, because building a separate model for each machine is impractical. Fortunately, similar types of machines often exhibit similar operating patterns and share common characteristics when they behave abnormally. Transfer learning techniques can exploit these similarities to address the scaling problem.
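One common form of the transfer learning described here keeps a shared feature extractor frozen. Only a small classifier head is fine-tuned, warm-started from the source machine's weights, on a handful of labelled samples from the target machine. The sketch below assumes that form. The two-band feature extractor, the logistic-regression head, the synthetic spectra, and all hyperparameters are hypothetical.

```python
import math

def extract_features(spectrum):
    """Frozen, shared feature extractor (hypothetical): mean magnitude of the low and high halves."""
    half = len(spectrum) // 2
    return [sum(spectrum[:half]) / half, sum(spectrum[half:]) / (len(spectrum) - half)]

def predict(w, b, x):
    """Logistic-regression head: probability that feature vector x is abnormal."""
    return 1.0 / (1.0 + math.exp(-(sum(wi * xi for wi, xi in zip(w, x)) + b)))

def train_head(X, y, w=None, b=0.0, epochs=200, lr=0.5):
    """Full-batch gradient descent on the logistic loss, optionally warm-started from (w, b)."""
    w = list(w) if w is not None else [0.0] * len(X[0])
    n = len(X)
    for _ in range(epochs):
        errors = [predict(w, b, x) - t for x, t in zip(X, y)]
        w = [wj - lr * sum(e * x[j] for e, x in zip(errors, X)) / n for j, wj in enumerate(w)]
        b -= lr * sum(errors) / n
    return w, b

# Source machine: plenty of labelled spectra (0 = healthy, 1 = abnormal).
source = [([1.0, 1.0, 0.1, 0.1], 0), ([0.9, 1.0, 0.2, 0.1], 0),
          ([0.2, 0.2, 1.0, 1.0], 1), ([0.1, 0.3, 0.8, 1.1], 1)]
# Target machine: same fault pattern at a different operating level, only two labelled samples.
target = [([1.5, 1.5, 0.4, 0.4], 0), ([0.5, 0.5, 1.3, 1.3], 1)]

Xs, ys = [extract_features(s) for s, _ in source], [lbl for _, lbl in source]
Xt, yt = [extract_features(s) for s, _ in target], [lbl for _, lbl in target]

w_src, b_src = train_head(Xs, ys)                           # train on the source machine
w_tgt, b_tgt = train_head(Xt, yt, w_src, b_src, epochs=50)  # fine-tune the head on the target
print([round(predict(w_tgt, b_tgt, x)) for x in Xt])  # [0, 1]
```

Because training starts from the source weights, the target machine needs only a few labelled examples and epochs. Whether to also fine-tune the feature extractor is a design choice the text leaves open.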

AI for Health 4.0

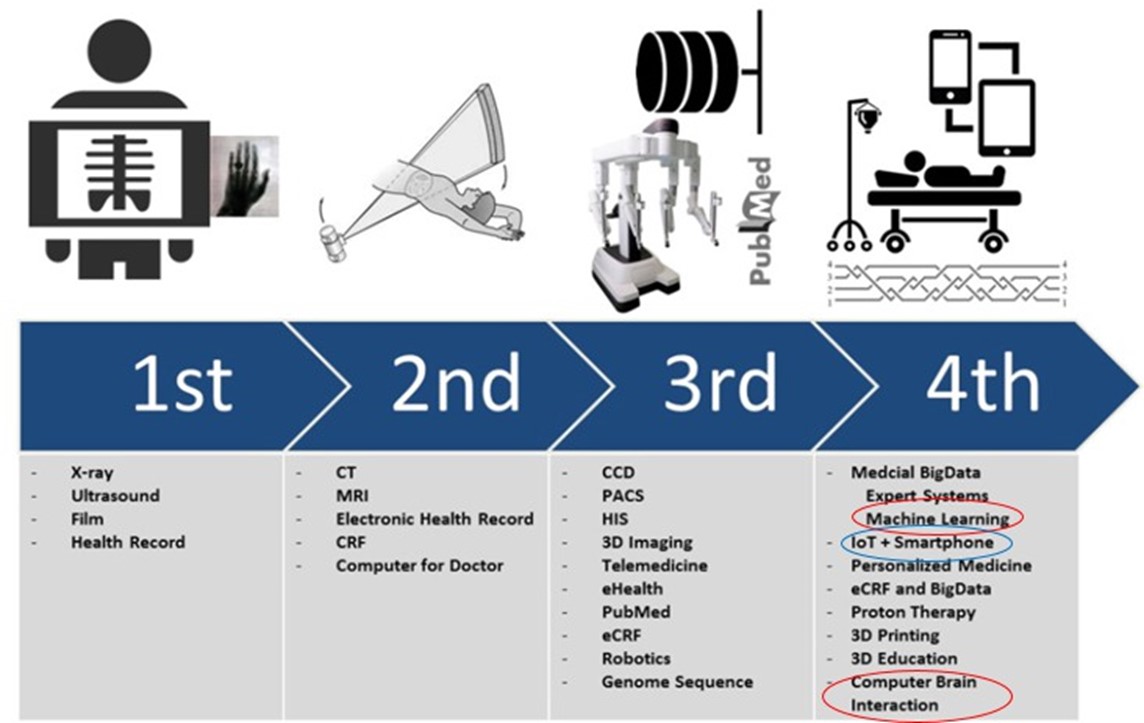

Smart healthcare aims to make it more convenient and efficient for people to maintain and care for their own health and that of their family members. It uses smart terminal devices and wearable devices to measure individuals' physiological data and related information. Artificial intelligence is then used to recognize physiological and behavioral characteristics, with the goal of monitoring and even predicting a person's health condition to provide preventive medical care. The era of Healthcare 4.0 offers numerous opportunities for information and communication technologies, as illustrated in the accompanying diagram.

Mandarin Chinese has the largest population of native speakers in the world, approximately 14% of the world's population (around 960 million people). However, most past studies on brainwave-based language analysis have focused on English. Mandarin Chinese and English differ in their phonetic mechanisms: Mandarin has four tones and a neutral tone, which English lacks. Mandarin Chinese is also a monosyllabic language with just over a thousand distinct sounds, yet it has more than ten thousand commonly used characters. Each character carries its own meaning, and combinations of characters form an immense variety of words. In essence, Mandarin Chinese uses a little over a thousand sounds to build a language with countless variations. The primary challenge in Mandarin phonetics is therefore handling the relationship between "sounds" and "characters." Because Mandarin Chinese presents a more complex linguistic context than English, brainwave research based on Mandarin Chinese is a worthwhile and profound subject.

Artificial intelligence uses deep neural networks to learn features from data, enabling the discovery of associations that humans may miss. In recent years, speech recognition acoustic models have replaced traditional Gaussian Mixture Models (GMMs) with Deep Neural Networks (DNNs), improving performance. This shift suggests that deep learning could likewise advance brainwave research by analyzing the correlation between brainwaves and Mandarin Chinese speech, a significant step forward for the field.

Therefore, within AI for Health 4.0, our research team uses deep learning techniques to analyze EEG and fEMG signals. Building on past research findings, we are establishing a speech recognition model that analyzes and recognizes Mandarin Chinese speech in real time from non-invasive brainwave signals. The system is a non-invasive brain-machine interface that uses classifiers to interpret brain activity. However, individual brains develop differently and each person has a unique neural structure, so the same brain activity may produce different brainwave characteristics. As a result, a classifier's accuracy drops significantly when it is applied to a new user. To address this challenge, our research team plans to propose a transfer learning framework based on data transfer. Given a new user's task-agnostic brainwave data, the framework searches the database for suitable training data. This transfer would let a Mandarin Chinese speech recognition system developed for one person serve a broader audience. It could pave the way for mobile brainwave speech recognition devices that assist a wider range of people, including those with speech disorders.
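The data-transfer step can be sketched as a nearest-neighbour search over stored subjects. Each subject's task-agnostic recordings (for example, resting-state band powers) are summarized as a mean feature vector, and the subjects closest to the new user are selected as sources of training data. The feature choice, the Euclidean distance, `k`, and the function names are all assumptions for illustration; the text does not specify the matching criterion.

```python
import math

def mean_vector(trials):
    """Average feature vector over one subject's task-agnostic trials."""
    return [sum(values) / len(trials) for values in zip(*trials)]

def select_source_subjects(new_user_trials, database, k=2):
    """Return the ids of the k stored subjects whose mean task-agnostic features
    are closest (Euclidean distance) to the new user's."""
    target = mean_vector(new_user_trials)
    ranked = sorted(database, key=lambda sid: math.dist(target, mean_vector(database[sid])))
    return ranked[:k]

# Hypothetical database: subject id -> task-agnostic feature vectors (e.g. two band powers).
database = {
    "s1": [[1.0, 0.0], [1.2, 0.0]],
    "s2": [[5.0, 5.0]],
    "s3": [[0.9, 0.1]],
}
print(select_source_subjects([[1.0, 0.1]], database))  # ['s3', 's1']
```

The selected subjects' labelled speech-task recordings could then be pooled to train, or fine-tune, the classifier for the new user.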

KEY TECHNOLOGIES FOR 5G WIRELESS SYSTEMS, Cambridge University Press, 2017 (ISBN: 978-1-107-17241-8), edited by Vincent Wong, Robert Schober, Derrick W. K. Ng, and Li-Chun Wang.

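Li-Chun Wang and Yi-Lan Liu (王蒞君、劉奕蘭), 化鏡為窗:大數據分析強化大學競爭力 [Turning Mirrors into Windows: Strengthening University Competitiveness with Big Data Analytics], National Chiao Tung University Press, Hsinchu, 2020 (ISBN-13: 978-957-8614-41-3).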

Ying Loong Lee, Allyson Gek Hong Sim, Li-Chun Wang, Teong Chee Chuah, “Blockchain for 6G-Enabled Network-Based Applications,” Chap. 29 in Blockchain and Artificial Intelligence–Based Radio Access Network Slicing for 6G Networks (CRC Press 2022).

Li-Chun Wang and Yu-Jia Chen, “Big Data Help SDN to Detect Intrusions and Secure Data Flows,” Chap. 19 in Big-Data and Software Defined Networking (IET, 2017).

Li-Chun Wang and Chu-Jung Yeh, “Antenna Architectures for Network MIMO,” Chap. 19 in Cooperative Cellular Wireless Networks (edited by Ekram Hossain, Vijay Bhargava, and Kim), Cambridge, 2011.

Jane-Hwa Huang, Li-Chun Wang, and Chung-Ju Chang, “Scalable Wireless Mesh Network Architectures with QoS Provisioning (Invited Chapter),” Quality of Service Architectures for Wireless Networks: Performance Metrics and Management, (edited by Drs. Sasan Adib, Raj Jain, Tom Tofigh, and Shyam Parekh, published by IGI Global, 2010).

Jane-Hwa Huang, Li-Chun Wang, and Chung-Ju Chang, “Architectures and Deployment Strategies for Wireless Mesh Networks,” Chap. 2, pp. 29-56, Wireless Mesh Networks: Architectures and Protocols (edited by Ekram Hossain and Kin K. Leung, published by Springer, Nov. 2007. ISBN: 978-0-387-68838-1).

Li-Chun Wang, “Link Adaptation for Carrier Sense Multiple Access Based Wireless Local Area Networks,” ADAPTATION TECHNIQUES IN WIRELESS MULTIMEDIA NETWORKS (edited by Drs. Wei Li and Yang Xiao), Nova Science Publishers, 2007 (ISBN: 1-59454-883-8).

Jane-Hwa Huang, Li-Chun Wang, and Chung-Ju Chang, “Scalability in Wireless Mesh Networks,” Chap. 7, pp. 225-262, Wireless Mesh Networking: Architectures, Protocols And Standards, (edited by Drs. Yan Zhang, Jijun Luo, and Honglin Hu, published by Auerbach Publications, CRC Press, Dec. 2006. ISBN: 978-0-849-37399-2).

Li-Chun Wang, “Power Allocation Mechanisms for Downlink Soft Handoff in the WCDMA System,” Chap. 19, DESIGN AND ANALYSIS OF WIRELESS NETWORKS, (edited by Drs. Yi Pan and Yang Xiao), Nova Science Publishers, 2005 (ISBN:1-59454-186-8).